Even as artificial intelligence (AI) has become more commonplace and businesses across industries have come to rely on it, it still faces criticism over whether it can be implemented safely and ethically and, relatedly, over how the bias often inherent in the underlying algorithms can be detected and reduced.

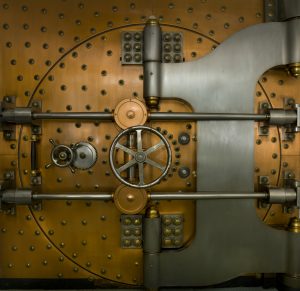

Enter the Black Box Problem. In general, a “black box” is a device, system or program that allows a user to see the input and output of a computing system without there being a clear view into the processes and workings in between. In the context of AI, the Black Box Problem refers to the fact that computing systems used to solve problems in AI and enable machine learning are opaque. More specifically, the problem can be defined as an inability to fully understand an AI’s decision-making process and the inability to predict the AI’s decisions or outputs. In some cases, this opacity is intentional to protect against reverse engineering that could reveal trade secrets within the AI algorithm or allow an individual to hack the algorithm. However, with machine learning, the Black Box Problem can also arise when data is ingested and combined by an algorithm to provide output in a way that may not be readily determined even by those who designed the algorithm. Even if one has a list of the input variables, black box predictive models can be based on such complicated functions of the variables that no human can understand how the variables are jointly related to each other to reach a final prediction.

Given the risks of the Black Box Problem, companies may want to consider implementing a “weak” black box for AI tools. While a weak black box may still employ decision-making processes that are opaque to humans, it can be reverse engineered or probed to glean information on the importance and role of the variables relied upon by the AI. In this way, weak black boxes may allow a limited ability to predict how the model will make its decisions and thus make it easier to test for potential biases and decisional faults. In contrast, a “strong” black box completely shields an AI’s decision-making processes such that there is no meaningful way to determine how the AI arrived at a decision or prediction: there is no way to determine what information is outcome determinative to the AI or how the variables processed by the AI rank in order of importance.
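To make "probing" a weak black box more concrete, the sketch below uses permutation importance, one common probing technique among several: shuffle one input variable at a time and measure how much the model's accuracy drops. Everything here is an illustrative assumption, including the stand-in `opaque_model`, the variable names (`income`, `debt`, `noise`) and the synthetic data; it is not drawn from any particular AI tool.

```python
import random

def opaque_model(row):
    """Hypothetical stand-in for a black box: callers see only input and output."""
    income, debt, noise = row
    return 1 if (0.8 * income - 0.5 * debt) > 0.2 else 0

def accuracy(model, rows, labels):
    """Share of rows where the model's output matches the reference label."""
    hits = sum(1 for r, y in zip(rows, labels) if model(r) == y)
    return hits / len(rows)

def permutation_importance(model, rows, labels, n_repeats=20, seed=0):
    """Average accuracy drop when each input column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for col in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            # Shuffle one column so its link to the outcome is broken.
            shuffled = [r[col] for r in rows]
            rng.shuffle(shuffled)
            probed = [r[:col] + (v,) + r[col + 1:] for r, v in zip(rows, shuffled)]
            drops.append(baseline - accuracy(model, probed, labels))
        importances.append(sum(drops) / n_repeats)
    return importances

# Synthetic inputs; the labels are simply the model's own past decisions.
data_rng = random.Random(42)
rows = [(data_rng.random(), data_rng.random(), data_rng.random()) for _ in range(500)]
labels = [opaque_model(r) for r in rows]

names = ["income", "debt", "noise"]
scores = permutation_importance(opaque_model, rows, labels)
for name, score in zip(names, scores):
    print(f"{name}: {score:.3f}")
```

In this toy setup, a variable the model ignores shows no accuracy drop, while variables it relies on show a positive one. With a strong black box, by contrast, this kind of repeated querying would not be available or would not yield meaningful rankings.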

Granted, a “weak” black box is not without its own drawbacks. AI designers may be forced to use shallower or less complex architectures in order to increase transparency, which may result in poorer performance. While performance sacrifices are hardly ideal, this may be the only viable way at the moment to satisfy concerns about using AI ethically. It may also allow common legal doctrines like intent and causation, which are focused on human conduct, to apply more readily to AI decisions, so that tort and liability laws can more aptly regulate AI.

Regardless of where a company comes down on the transparency of the algorithms upon which it relies, there remain some simple steps companies can take to be better prepared to answer the black box question:

- Establish controls for your company by creating checks for data gathering, preparation, model selection, training, evaluation, validation and deployment;

- Develop AI risk management strategies and policies, and identify those whose responsibilities in the company are to oversee compliance with these policies;

- Have independent auditors review black box algorithms to assess the validity of the training datasets and monitor the outcomes from the algorithms to ensure optimal performance without any unintended bias; and

- Develop and implement internal surveillance steps to monitor and understand how the AI is performing.
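As one illustration of what monitoring outcomes for unintended bias might look like in practice, the sketch below compares approval rates across groups in a decision log and flags large gaps for human review. The log format, the group labels and the `REVIEW_THRESHOLD` value are all hypothetical assumptions for this example; an actual audit would choose its metrics and thresholds with legal and statistical advice.

```python
from collections import defaultdict

def selection_rates(decisions):
    """Map each group to its approval rate, given (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {g: approved[g] / totals[g] for g in totals}

def impact_ratio(rates):
    """Lowest group rate divided by the highest; 1.0 means identical rates."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0

# Hypothetical audit log of AI decisions: (group label, was the applicant approved?)
log = (
    [("A", True)] * 60 + [("A", False)] * 40
    + [("B", True)] * 30 + [("B", False)] * 70
)

REVIEW_THRESHOLD = 0.8  # hypothetical internal policy value, not a legal standard

rates = selection_rates(log)
ratio = impact_ratio(rates)
needs_review = ratio < REVIEW_THRESHOLD

print(rates)
print(f"impact ratio: {ratio:.2f}, needs review: {needs_review}")
```

A check like this looks only at outputs, so it can be run even against a strong black box. What it cannot do is explain why the rates differ, which is where the probing available with a weak black box becomes useful.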

Ultimately, the question of whether the benefits of weak black box AI outweigh those of more robust but opaque AI is a nuanced one that turns on a host of shifting variables, including developing laws and regulations, consumer perceptions and media attention. Considering and answering that question should nonetheless be a recurring item for company leadership (or legal teams). Just as a company’s business plan and risk profile evolve, so, too, will the value proposition of weak versus strong black box AI.

Internet & Social Media Law Blog

Internet & Social Media Law Blog